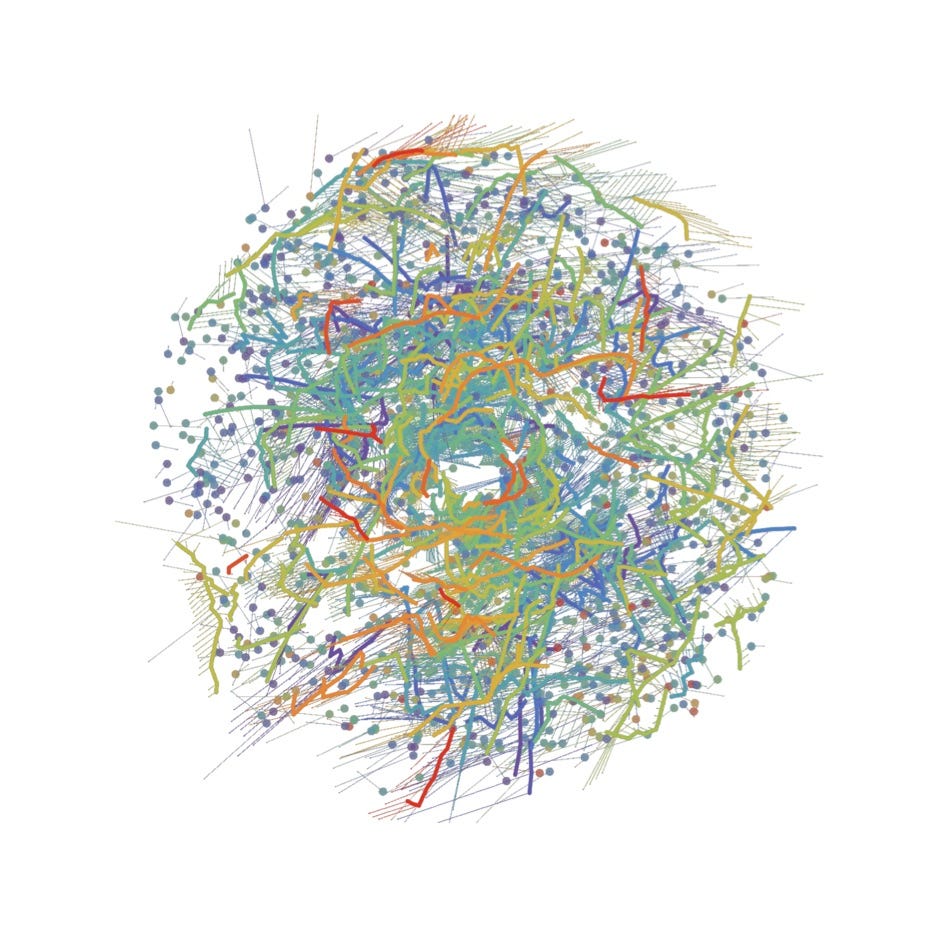

Trust me, there is a black hole in here

Why we need interpretability if we want to apply machine learning to astronomy

Recently there has been a lot of interest in explainable artificial intelligence, aka XAI. As with any trend in the broader machine learning community, astronomers applying machine learning to astronomical problems will soon follow suit. But at this stage we are still often applying machine learning methods to new datasets for the first time, so interpretability and explainability (which often get conflated) are mostly an afterthought.

The scenario in XAI is typically as follows: we have some black box algorithm that makes predictions in mysterious ways, mostly because of its complexity. Its predictions affect people and, understandably, people want explanations. Even the law may demand that explanations be provided. Usually at this point someone brings up the ‘right to explanation’ in recently introduced EU legislation. So a vast field of research was spawned by the sudden need to explain black boxes. Lots of clever schemes were devised, from Shapley values to counterfactual examples to anchors ...

Yet the whole explainability mindset has been the object of strong criticism. A radical point of view is that explanations simply cannot be good enough. After all, if an explanation were really good, we could use the explanation itself directly for prediction, rather than the black box. So why train a black box in the first place? In this sense, explanations are defective by design. Yet, at least from what I see in astronomy, just as the last few years saw a steady increase in the popularity of black boxes, the next few years will see the rise of explanations.

The idea that we can build black boxes and then explain their behavior is obviously appealing to people who work in the field, including academics. One reason is simply that you get twice as many jobs as you would with black boxes alone: one position for the black box trainer, one for the black box explainer. And in academia, having more work to do means more papers. One paper for training the black box and one for explaining it equals two papers and one (self-)citation.

The other, related, reason is prestige: the black box is a magic oracle that I built using the powerful tools of AI. Explaining its decisions does not detract from the mystery as long as the explanations do not cover the whole feature space (as is the case with local surrogate models) or are not fully accurate, so that we still ultimately depend on the black box.
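A toy illustration of why a local surrogate leaves the mystery intact: the minimal Python sketch below (not taken from any XAI package) uses a hypothetical `black_box` function as a stand-in for an opaque model, and a hypothetical helper, `fit_local_surrogate`, that fits a straight line to the black box's outputs on points sampled close to a single input. The assumptions are deliberately simple: one feature, uniform sampling in a small window, and an unweighted least-squares line.

```python
import math
import random


def black_box(x):
    # Hypothetical stand-in for an opaque model: a nonlinear function of one feature.
    return math.sin(3 * x) + 0.5 * x


def fit_local_surrogate(f, x0, width=0.1, n=200, seed=0):
    # Hypothetical helper: sample n points uniformly within +/- width of x0,
    # query the black box there, and fit y = a + b * x by ordinary least squares.
    rng = random.Random(seed)
    xs = [x0 + rng.uniform(-width, width) for _ in range(n)]
    ys = [f(x) for x in xs]
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    a = mean_y - b * mean_x
    # The "explanation" is just this line: intercept a, slope b.
    return lambda x: a + b * x


# Explain the black box around x0 = 0, then check how faithful the explanation is
# near that point and far away from it.
surrogate = fit_local_surrogate(black_box, x0=0.0)
for x in [0.0, 0.05, 1.0, 2.0]:
    err = abs(black_box(x) - surrogate(x))
    print(f"x={x:4.2f}  black box={black_box(x):+.3f}  "
          f"surrogate={surrogate(x):+.3f}  |error|={err:.3f}")
```

Near x = 0 the line tracks the black box closely; at x = 1 and x = 2 it is off by several units, so for those inputs we are back to trusting the black box. Practical local-surrogate methods perturb many features at once and weight samples by proximity, but they share the same limitation: the explanation is only faithful locally.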

On the other hand, a natively interpretable model loses most of the charm of the black box: it is just a set of rules, it is just a scoring system. Much as a painting by Mondrian is just a bunch of rectangles filled with bright colors. My five-year-old could have made that, right? Wrong. Few will notice that without a sophisticated search algorithm we would not have found the optimal combination of rules that looks so simple after the fact. Fewer still will see that all of this is built on top of clever feature engineering, and feature engineering by humans is already regarded as passé anyway. So my bet is that both black boxes and their explanations are here to stay, even though this may be bad news for science.

At its core, science has always been about convincing people. The rigorous web of logical deductions that constitutes the backbone of math and of all exact sciences, is –ultimately- a rhetorical device. Heck, there is even speculation that historically it developed out of rhetoric. If you and I agree on some rule to deduce B from A, and I then show you that A holds, you must agree with me that B also holds. I have convinced you. And you can be convinced by a proof without having to trust me: actually, science works best when taken with a healthy dose of skepticism. Proofs are the ultimate glass box.

With this in mind, it becomes easy to see why black boxes cannot possibly have a central place in science, but should be relegated to ancillary tasks such as data preparation. The whole point of science is to understand nature by building models that can be taken apart and criticized piece by piece. A classifier built by averaging thousands of decision trees trained on hundreds of features (a large fraction of which may be irrelevant) is the opposite of that. Tacking a partial explanation on top of it may make you feel like you understood why the black box made its prediction, but can you be sure? Were you convinced by a watertight argument, or are you just accepting an explanation based on trust?

Come to think of it, science tries to avoid appeals to authority not because of some intrinsic rebelliousness, but precisely because authority is a black box. If you believe that something holds because George Djorgovski wrote it, Djorgovski is your black box. If you do not try to replicate someone’s findings because they were published in a reputable journal, that someone is your black box, and it does not matter whether they are a mammal or live in a GPU.

Hi Mario,

Great to read your blog, you are doing a really nice job!

The issue you raise about machine learning is very real. When I first heard about machine learning in physics, I thought that it was, by definition, the very opposite of science. Science is about using our intelligence to pin down specific observations with a simple model that starts from given facts and arrives at the observation. For instance, I could train my machine-learning algorithm on the tides observed at several sea locations around the world, and in the end it would probably be able to predict the tides at a given uncharted location. This might be great from an engineering viewpoint, but as a scientist I think that we understand far more about tides once we make the connection with the Moon, the Sun, Newtonian gravity and the laws of water flow. Without understanding that, we would not be significantly wiser!

However, the more I think about it, the more I have the impression that machine learning is just another numerical tool. We can use it to guide our intuition, but in the end we need to prove the results it gives us, at least partially. My research is in condensed-matter physics, and I am fine if machine learning tells my colleague (I don’t use it myself) that a condensed-matter model has three very exotic quantum phases of matter, but then he (we?) should be able to understand those phases and link them to the original model with pen and paper. I feel that only at that stage have we produced knowledge. Only at that stage have we identified the key properties of the model, which somebody could then use to see what happens when the model is perturbed by adding, for instance, an electromagnetic field. Many people might be happy with the machine-learning result; to me it is just a hint.

I have a remark about the point where you say: “At its core, science has always been about convincing people. The rigorous web of logical deductions that constitutes the backbone of math and of all exact sciences, is –ultimately- a rhetorical device.” I have the impression that here you are biased by your research on black holes in complex astrophysical objects. I think that science is about producing models that are able to predict the behavior of nature under certain conditions. It’s not just about rhetoric; it’s also about numbers and data!

Leonardo