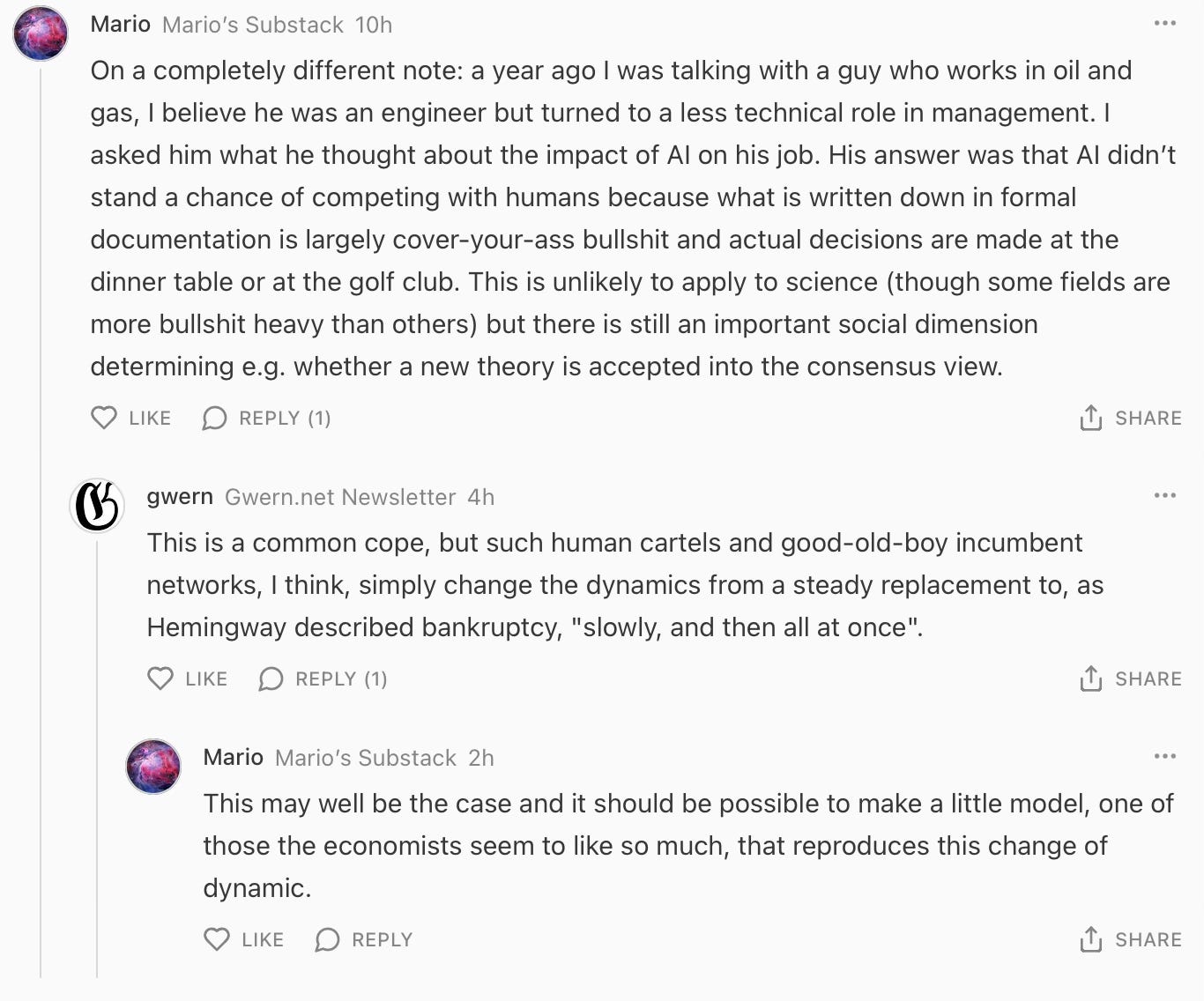

I have recently experienced just how good chatGPT 4.5 is. It’s been a couple of years that people are joking that they could just replace students or postdocs with instances of chatGPT (or maybe of DeepSeek) but it does not look like anyone is taking this too seriously at the moment. Perhaps the reason is the one discussed here, namely that the illegibility of the tasks a student has to string together to actually get research done is a key obstacle. When I chimed in, an exchange with Gwern followed: here is a screenshot from the comment section:

So let’s make a little model, alright?

Imagine that a company¹ employs humans for a certain fraction of the tasks it has to carry out, and uses AI for the rest. The average cost per task will then be
$$ \bar{c} = p\,c_{AI} + (1-p)\,c_H $$
where $p$ is the fraction of tasks assigned to AI, and $c_{AI}$ and $c_H$ are the costs per task of AI and of humans, respectively. We break the cost per task of AI down into a constant term $c_0$ plus a term representing additional “illegibility costs” due to the need to interact with humans, so that
$$ c_{AI} = c_0 + a\,(1-p) $$
with the assumption that the illegibility cost term, governed by a constant $a > 0$, scales linearly with the fraction $1-p$ of human labor still involved in carrying out the company’s tasks, and would drop to zero if those humans were removed. Substituting, we get
$$ \bar{c} = p\,\big[c_0 + a\,(1-p)\big] + (1-p)\,c_H = c_H + (c_0 + a - c_H)\,p - a\,p^2 $$
so the average cost per task is a downward-opening parabola in $p$. We need only consider the interval $[0, 1]$, since we cannot get more than 100% of the tasks automated by AI, nor less than 0%.
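
To make the shape of the cost curve concrete, here is a minimal Python sketch of the model. The function names and parameter values are hypothetical, chosen only for illustration and not estimated from any real company; the sketch also checks that the direct definition and the expanded parabola give the same numbers.

```python
def cost_ai(p: float, c0: float, a: float) -> float:
    """AI cost per task: constant c0 plus an illegibility term a * (1 - p),
    proportional to the fraction of tasks still done by humans."""
    return c0 + a * (1 - p)


def average_cost(p: float, c0: float, a: float, c_h: float) -> float:
    """Average cost per task when a fraction p of tasks is assigned to AI."""
    return p * cost_ai(p, c0, a) + (1 - p) * c_h


def average_cost_expanded(p: float, c0: float, a: float, c_h: float) -> float:
    """Same quantity written as a parabola: c_H + (c0 + a - c_H) p - a p^2."""
    return c_h + (c0 + a - c_h) * p - a * p ** 2


if __name__ == "__main__":
    # Hypothetical numbers: humans cost 1.0 per task, AI 0.2, illegibility constant 0.5.
    c0, a, c_h = 0.2, 0.5, 1.0
    for k in range(0, 11, 2):
        p = k / 10
        direct = average_cost(p, c0, a, c_h)
        expanded = average_cost_expanded(p, c0, a, c_h)
        assert abs(direct - expanded) < 1e-12  # the two forms agree
        print(f"p = {p:.1f}  average cost = {direct:.3f}")
```

With these made-up numbers the printed average cost falls from 1.000 at p = 0 to 0.200 at p = 1.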

If we start at $p = 0$, it will be worthwhile for a company to gradually increase the fraction of AI-automated tasks only if the derivative at $p = 0$ is negative. This translates to
$$ \left.\frac{d\bar{c}}{dp}\right|_{p=0} = c_0 + a - c_H < 0 $$
so, for a company to start automating, the constant $a$ (which sets the size of the illegibility costs for a given fraction of human presence) has to be less than the savings per task due to AI, i.e.
$$ a < c_H - c_0. $$
Whether this happens for a given $c_0$ depends on the overall level of illegibility of the field in question. If the cost per task of AI eventually drops to zero ($c_0 \to 0$), the condition becomes $a < c_H$: only the fields where illegibility adds a cost comparable to that of a human salary ($a \geq c_H$) will stay AI-free.
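The adoption condition can be checked the same way. The sketch below, again with hypothetical function names and made-up numbers, evaluates the slope of the average cost at p = 0 and reports whether a company starting from an all-human workforce has an incentive to begin automating, both at a nonzero AI cost and at an AI cost of zero.

```python
def slope_at(p: float, c0: float, a: float, c_h: float) -> float:
    """Derivative of the average cost per task: c0 + a - c_H - 2 a p."""
    return c0 + a - c_h - 2 * a * p


def starts_automating(c0: float, a: float, c_h: float) -> bool:
    """True when the slope at p = 0 is negative, i.e. when a < c_H - c0."""
    return slope_at(0.0, c0, a, c_h) < 0


if __name__ == "__main__":
    c_h = 1.0  # hypothetical human cost per task
    # Hypothetical fields ordered from legible (small a) to illegible (large a).
    for a in (0.1, 0.5, 0.9, 1.2):
        for c0 in (0.5, 0.0):  # a nonzero AI cost vs. AI that is essentially free
            print(f"a = {a:.1f}, c0 = {c0:.1f}: automate? {starts_automating(c0, a, c_h)}")
```

With $c_0 = 0$ only the hypothetical field with $a = 1.2 > c_H$ stays AI-free, in line with the $a \geq c_H$ threshold.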

Interestingly, in this simple model, Gwern’s hunch is vindicated: the derivative at a generic value of $p$ is
$$ \frac{d\bar{c}}{dp} = c_0 + a - c_H - 2ap $$
so if it is negative at $p = 0$, it stays negative over the whole interval $[0, 1]$, because the term $-2ap$ is never positive there. This means that as soon as it becomes worthwhile to automate some tasks, it becomes worthwhile to automate all of them: humans will be fired en masse, suddenly.
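The all-or-nothing behaviour can also be seen by simulating a company that adjusts gradually. In the hypothetical sketch below, the company starts at p = 0 and keeps moving to the next grid point k/n as long as that lowers its average cost; it is a simple local search under the model's assumptions, not a claim about how real firms decide. The AI cost c0 is lowered step by step to mimic AI getting cheaper over time.

```python
def average_cost(p: float, c0: float, a: float, c_h: float) -> float:
    """Average cost per task: c_H + (c0 + a - c_H) p - a p^2."""
    return c_h + (c0 + a - c_h) * p - a * p ** 2


def gradual_automation(c0: float, a: float, c_h: float, n: int = 1000) -> float:
    """Raise p from 0 in steps of 1/n while each step reduces the average cost.
    Returns the fraction of tasks automated when the company stops."""
    k = 0
    while k < n and average_cost((k + 1) / n, c0, a, c_h) < average_cost(k / n, c0, a, c_h):
        k += 1
    return k / n


if __name__ == "__main__":
    c_h, a = 1.0, 0.5  # hypothetical human cost and illegibility constant
    # Let the (hypothetical) AI cost c0 fall and see where the company ends up.
    for c0 in (0.8, 0.6, 0.52, 0.48, 0.4, 0.0):
        print(f"c0 = {c0:.2f}: fraction automated = {gradual_automation(c0, a, c_h):.3f}")
```

Because the parabola opens downward, the step-to-step change in cost only decreases as p grows, so once the first step pays off every later one does too: the search ends either at 0 or at 1, and here it jumps from 0 to 1 as c0 crosses $c_H - a = 0.5$.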

A caveat: this result hinges on the assumption that the level of illegibility is the same for all tasks, which is unlikely to be true. Also, as employees lose their jobs, $c_H$ would be driven down, perhaps until a new equilibrium is reached. More generally, it is very simplistic to assume a static world where only cost-cutting matters.

At any rate, this is a scary little model.

¹ This applies equally well to a nation or even to society as a whole, except for the caveat at the end.
